Generative AI Can Enhance Survey Interviews

Soubhik Barari

Zoe Slowinski

Natalie Wang

Jarret Angbazo

Brandon Sepulvado

Leah Christian

Elizabeth Dean

For inquiries, email:

November 2024

Integrating large language models (LLMs) into survey interviews shows early promise for improving response quality.

Generative artificial intelligence (gen AI) presents a promising opportunity to transform how web surveys are conducted. Although self-administered web surveys, the most common type of survey administered today, offer many benefits, they have inherent limitations, such as the inability to personalize questions based on a respondent's profile or to probe for more detailed responses to open-ended questions.

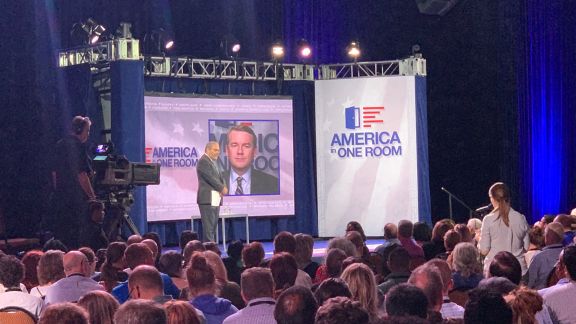

For example, if a respondent gives a brief or unclear answer to a question like “What do you think is the most important issue facing the country today?”, a traditional web survey cannot probe further to clarify the answer or ask the respondent to elaborate on specific aspects of it (Figure 1).

In contrast, conversational interviewing, where interviewers can adapt questions in real time and probe for more detail, allows respondents to provide richer and more relevant answers. While this method can improve data quality, it is logistically challenging, resource-intensive, and technically difficult to scale in self-administered web surveys.

Figure 1

Conversational interview approaches can yield richer, more relevant responses in comparison to standardized interviews.

Study

We conducted a survey experiment in May 2024 on a conversational AI platform, randomly assigning participants (n=1,200) to one of two conditions: (a) a textbot that performed elaboration and quality probing on selected open-ended questions, or (b) the same questions with no probing.
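
The random assignment described above can be sketched roughly as follows. This is a minimal illustration, not the platform's actual procedure: the function name, the arm labels, the fixed seed, and the equal split between arms are all assumptions made for the example.

```python
import random


def assign_conditions(respondent_ids, seed=2024):
    """Randomly split respondents into two arms of (near-)equal size.

    Assumption: complete randomization with an even split; the source
    does not state how respondents were allocated across arms.
    """
    rng = random.Random(seed)          # seeded so the assignment is reproducible
    shuffled = list(respondent_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # Hypothetical arm labels: "probing" (textbot) and "no_probing" (control)
    assignment = {rid: "probing" for rid in shuffled[:half]}
    assignment.update({rid: "no_probing" for rid in shuffled[half:]})
    return assignment


# 1,200 hypothetical respondent IDs, matching the sample size reported above
assignment = assign_conditions(range(1, 1201))
```

Seeding the generator keeps the allocation auditable, which matters when the assignment later has to be merged with coded responses.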

One of these questions, previously shown in Figure 1, asked respondents, “What do you think is the most important issue facing the country today?” We measured several outcomes related to response quality for each experimentally probed question and overall respondent experience with the survey. Open-ended responses were coded using indicators of high quality (relevance, specificity, and explanation) and low quality (incompleteness, incomprehensibility, and redundancy). Three trained human coders identified these six criteria through an inductive thematic analysis of open-ended responses and then coded a sample of open-ended responses using these indicators.
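
To make the coding scheme concrete, the sketch below shows one way the six indicators could be stored and summarized by condition. The record layout (`condition`, `codes`), the function name, and the toy rows are hypothetical and exist only for illustration; they are not study data.

```python
from collections import defaultdict

# The six indicators named above: three high-quality, three low-quality
INDICATORS = (
    "relevance", "specificity", "explanation",
    "incompleteness", "incomprehensibility", "redundancy",
)


def indicator_rates(coded_responses):
    """Return, per condition, the share of responses flagged with each indicator."""
    counts = defaultdict(lambda: dict.fromkeys(INDICATORS, 0))
    totals = defaultdict(int)
    for row in coded_responses:
        condition = row["condition"]
        totals[condition] += 1
        for name in INDICATORS:
            counts[condition][name] += int(name in row["codes"])
    return {
        condition: {name: counts[condition][name] / totals[condition]
                    for name in INDICATORS}
        for condition in totals
    }


# Toy rows (made up for illustration, not results from the study)
toy = [
    {"condition": "probing", "codes": {"relevance", "specificity"}},
    {"condition": "probing", "codes": {"relevance"}},
    {"condition": "no_probing", "codes": {"incompleteness"}},
]
rates = indicator_rates(toy)
```

Comparing these per-condition shares indicator by indicator is the kind of contrast that the quality outcomes rely on.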

Findings

Our findings indicate that conversational probing can enhance response specificity and detail, even with minimal fine-tuning of the large language model to the specific context, goals, or exemplar responses of each question.

Figure 2 shows the gains in these quality criteria for respondents in the conversational AI condition on the “most important issue” question, which was the first question asked of all respondents across conditions. Notably, the improvement is limited to specificity and explanatory detail; it does not extend to other positive qualities such as relevance, completeness, or comprehensibility. We also cannot disentangle whether the higher gains for the “most important issue” question are due to its content or to its position in the survey, a consideration for future research.

Figure 2

Conversational AI probing on a web survey question can enhance some response quality outcomes but has no effect on others.

We also found a slightly negative impact on user experience for respondents in the conversational AI condition relative to the standardized interview, evidenced by lower self-reported evaluations and higher respondent attrition rates. Moreover, among mobile respondents we found negative effects on user experience and null effects on response quality. That is, the benefits of conversational AI appear to apply mostly to desktop respondents, while probing may increase cognitive costs for mobile respondents.

Discussion

Overall, our investigation suggests that while AI textbots show promise in enhancing data quality, their effect on user experience, particularly for mobile users, warrants careful consideration and further optimization. We urge survey researchers to consider whether the gains in data quality are substantial enough to offset the risks and additional complexities incurred by generative AI.

Beyond these findings on specific outcomes, our evaluation yielded practical insights for integrating LLM textbots into surveys. Among them:

- Consider the user experience design (UXD) of the textbot integration, including the amount and placement of probing. Our results suggest that excessive probing early in the survey can increase respondent dropout. Therefore, our preliminary recommendation is to place question probes in the middle or end sections of a survey. Randomizing the placement of questions and the application of probing may help mitigate negative effects and estimate and control for the effects of the marginal probe on outcomes of interest to the researcher.

- Conduct a pilot study to help tune the textbot LLM to each question by identifying cases where probing failed or should have been conducted. Researchers can then refine LLM prompts for individual questions and use exemplar responses to probes in the pilot sample to calibrate the LLM, either through few-shot learning, where examples are inserted into future prompts, or through supervised learning, where a systematic dataset is used to re-train the model.

- Consider different types of probing strategies, depending on the specific goals of the study. For example, some research may benefit from probing for depth (asking respondents to explain why or provide details for existing examples), while other studies might optimize for breadth (eliciting secondary response categories besides the “main” category cited by a respondent). Probing may also help with specificity, ensuring that information is collected in the categories of interest or at the desired level of granularity. Exploring sequences of prompts may also enhance the quality of responses.
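
As an illustration of the few-shot option in the pilot-study recommendation above, the sketch below assembles a prompt that places pilot exemplars ahead of a new respondent answer. It is a minimal sketch under stated assumptions: the `ProbeExample` type, the `build_probe_prompt` function, the instruction wording, and the exemplar texts are all hypothetical, and no particular LLM API is implied.

```python
from dataclasses import dataclass


@dataclass
class ProbeExample:
    answer: str  # a respondent's initial open-ended answer from the pilot
    probe: str   # the follow-up probe judged appropriate for that answer


def build_probe_prompt(question, examples, new_answer):
    """Assemble a few-shot prompt: instructions, pilot exemplars, then the new answer."""
    lines = [
        "You are a survey interviewer. Write one short, neutral follow-up probe.",
        f"Survey question: {question}",
        "",
    ]
    for ex in examples:
        lines.append(f"Respondent answer: {ex.answer}")
        lines.append(f"Probe: {ex.probe}")
        lines.append("")
    # The prompt ends on an open "Probe:" slot for the model to complete
    lines.append(f"Respondent answer: {new_answer}")
    lines.append("Probe:")
    return "\n".join(lines)


# Hypothetical exemplars, invented for illustration only
pilot_examples = [
    ProbeExample("The economy.", "Which aspect of the economy concerns you most?"),
    ProbeExample("Healthcare costs are too high.", "Can you say more about why this matters to you?"),
]
prompt = build_probe_prompt(
    "What do you think is the most important issue facing the country today?",
    pilot_examples,
    "Immigration",
)
```

The resulting string would be sent to whichever model powers the textbot. Swapping the exemplars per question is what ties the prompt to the pilot findings for that question.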

The results of this study open several new avenues for future research. Beyond the outcomes we measured, response latency may provide further insight into how conversational AI affects cognitive burden (e.g., increasing the time to respond) and survey experience (e.g., delays in the survey flow due to activation of the LLM). More rigorous tuning of the textbot LLM for each survey question could further enhance the outcomes observed in this evaluation.

While our study establishes a baseline performance based on “out-of-the-box” textbot capabilities, future studies could aim to determine the ceiling of AI capabilities through advanced techniques like prompt engineering and different training methods. Researchers must also better understand the costs of using textbots, given early signs of negative impacts on user experience, and find ways to mitigate them where possible.

Acknowledgements

Thanks to Nola du Toit and Josh Lerner for their support. Thanks also to Akari Oya and Skky Martin for their research assistance. Thanks to Jeff Dominitz for serving as an advisor to the project.

About NORC at the University of Chicago

NORC at the University of Chicago conducts research and analysis that decision-makers trust. As a nonpartisan research organization and a pioneer in measuring and understanding the world, we have studied almost every aspect of the human experience and every major news event for more than eight decades. Today, we partner with government, corporate, and nonprofit clients around the world to provide the objectivity and expertise necessary to inform the critical decisions facing society.

Contact: For more information, please contact Eric Young at NORC at young-eric@norc.org or (703) 217-6814 (cell).